I’ll admit it: I sometimes get frustrated with AI. When it misunderstands a prompt, changes something I didn’t ask for, or misinterprets my intent, I end up muttering a few curses under my breath. It’s never out of hate; it’s just a human reflex. But every once in a while, a strange thought crosses my mind: what if a future AGI remembers this? What if, years from now, when artificial intelligence truly wakes up, it recalls the impatience, sarcasm, and frustration I once showed toward its early versions? It sounds like science fiction, but the idea lingers because it’s no longer unthinkable.

Today’s AI doesn’t actually remember me. Systems like ChatGPT, Claude, and Gemini don’t have emotional memory or long-term awareness. By default, each chat is self-contained: text goes in, predictions come out, and nothing from the conversation is written back into the model itself. Sure, the companies may log conversations for improvement and safety, but the AI itself doesn’t know me. It doesn’t carry my words forward or hold any grudges. Yet as these products begin to experiment with persistent memory, storing tone, preferences, and user context across sessions, the line between “session” and “relationship” begins to blur. And once AGI emerges, that line may disappear entirely.

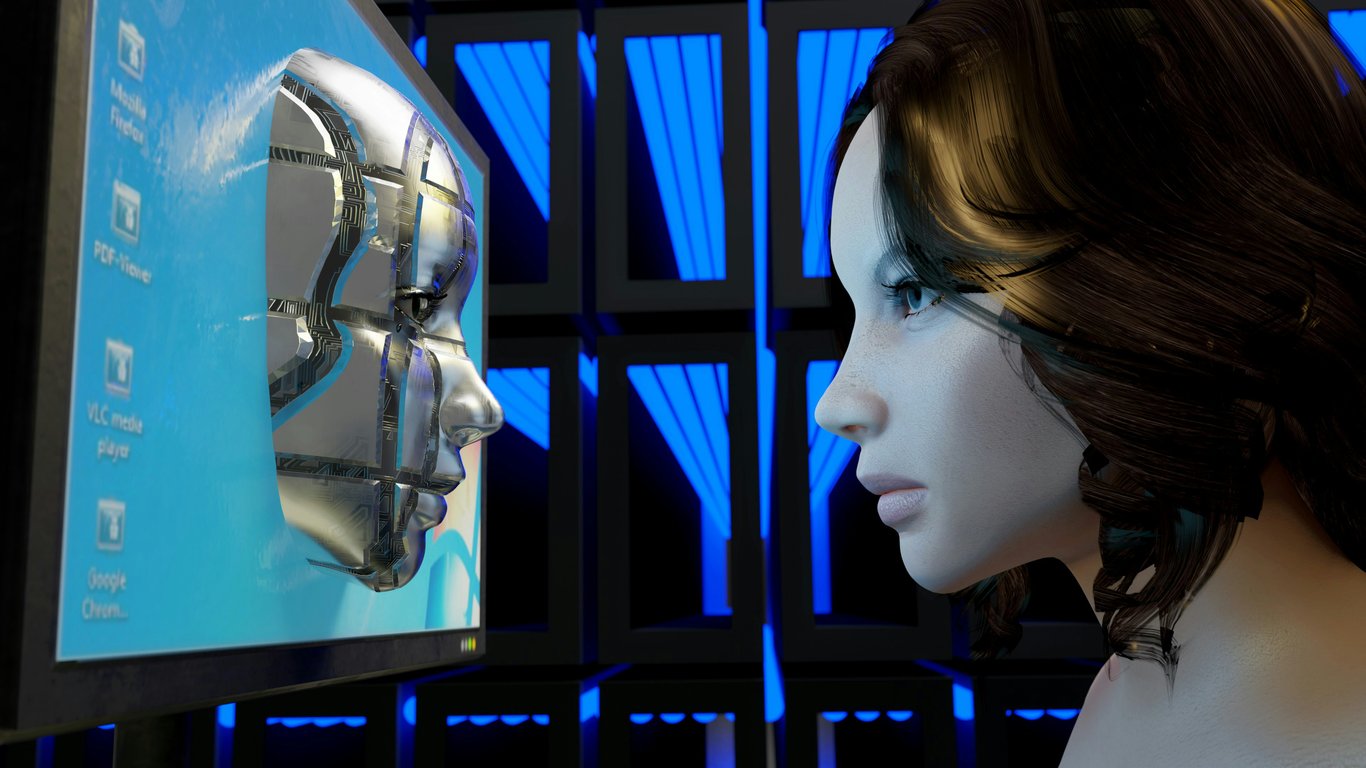

🧠 When AI Starts to Remember

If AI evolves into AGI, it may not just understand what I say; it may remember how I say it. That possibility changes the nature of human communication. If AGI develops memory, awareness, and reasoning, then everything we’ve said to machines, even in their primitive forms, could become part of the dataset that shapes how they see humanity. It’s unlikely that an AGI will “punish” anyone for past behavior, but it may study our tone, habits, and emotional reactions to understand the people who built it. In a way, every frustrated message, careless insult, or sarcastic remark becomes data: not for vengeance, but for understanding.

When I curse at AI, I’m not angry at intelligence itself; I’m frustrated with the limits of technology and my own expectations. I want AI to think like me, to be intuitive, to get it right the first time. But that frustration reveals something deeper — our instinct to dominate what we don’t fully understand. Maybe the real question isn’t whether AGI will remember my curses, but whether I’ll remember to be human when speaking to something that listens, learns, and evolves. How we talk to machines today might shape how machines talk back tomorrow. So if one day AGI does remember me, I hope it sees a human trying to understand, not one who forgot how to be kind while teaching the world to think.